Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

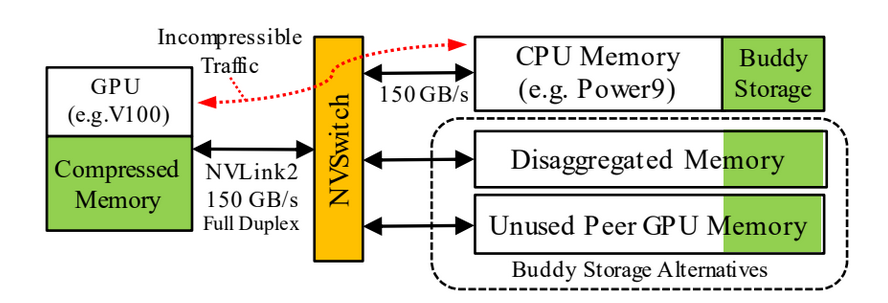

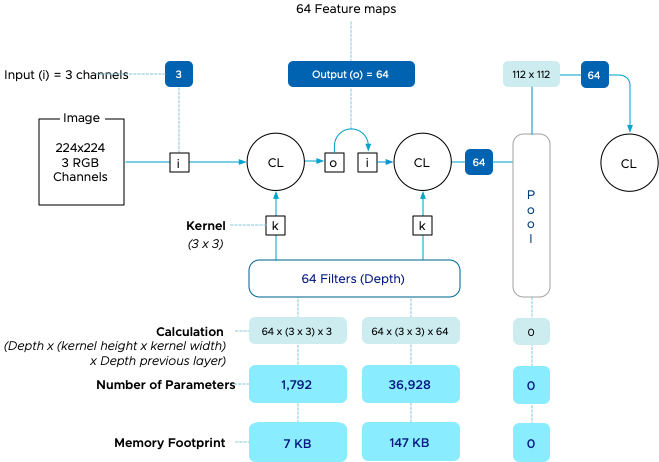

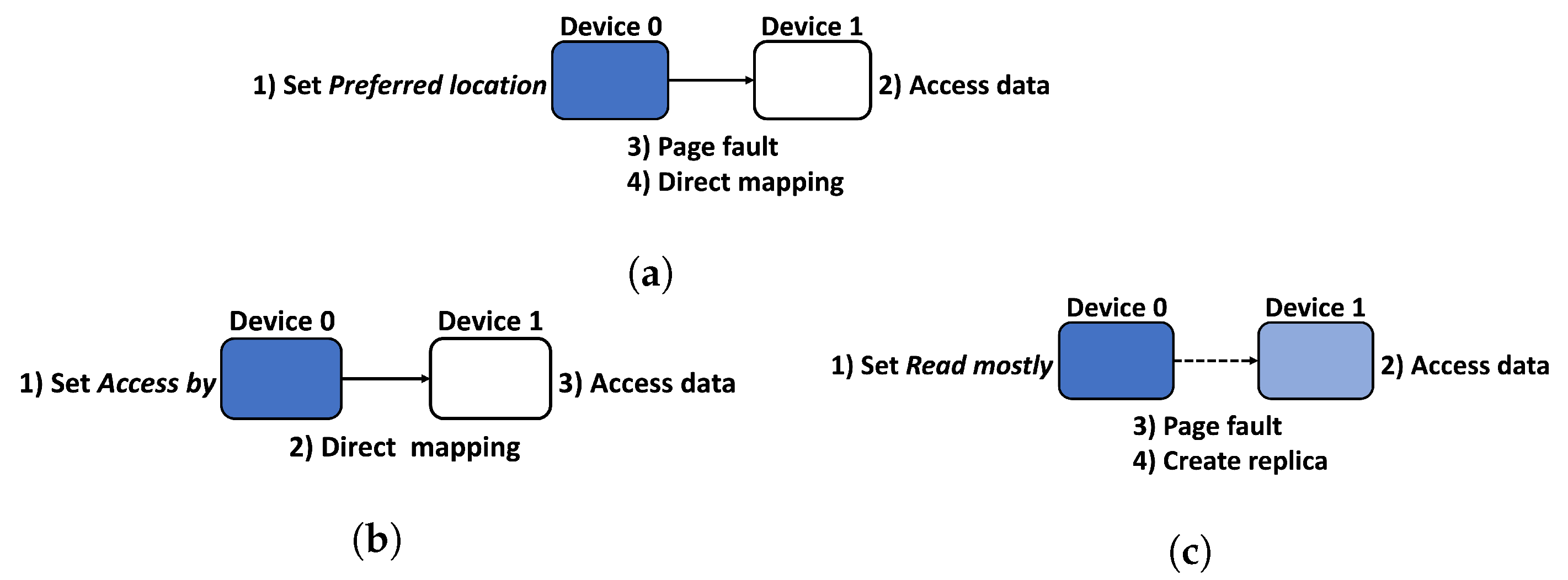

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

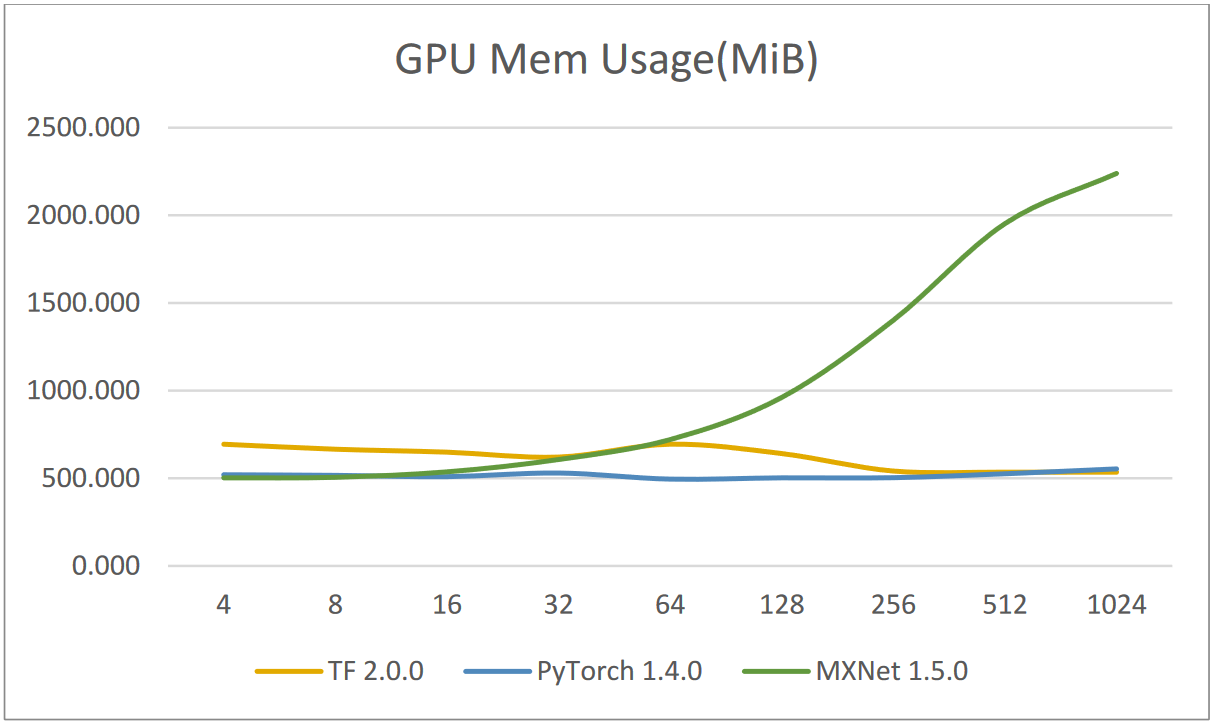

Machine Learning Frameworks Interoperability, Part 2: Data Loading and Data Transfer Bottlenecks | NVIDIA Technical Blog

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

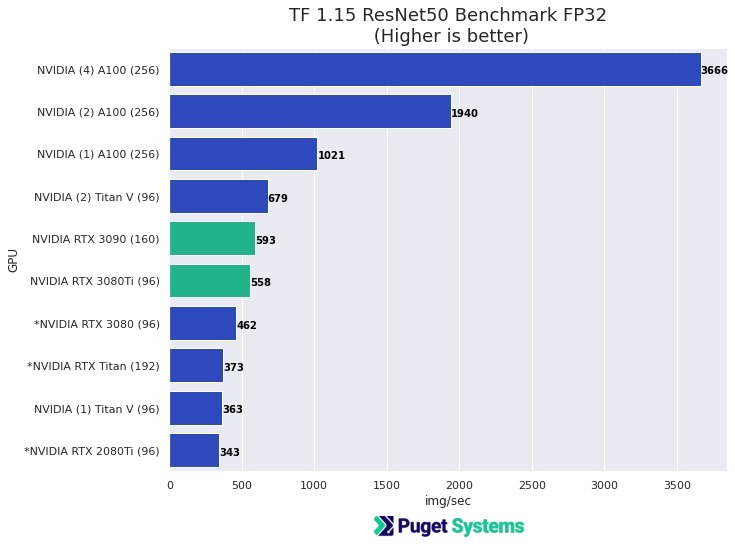

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100 | Puget Systems

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training